Remodeling Maidiac

Diamond Member

- Banned

- #1

Thoughts? There are some incredibly smart self-learning AI programs out there.

I already am, you nincompoop.

> Thoughts? There are some incredibly smart self-learning AI programs out there.

That was thought probably in the 1960s.

> Probably never. I suppose it's possible to make a machine intelligence that can narrowly fake sentience within programmed parameters but self-awareness is still an absolute mystery. No one has any idea how you could possibly boil it down to a set of instructions.

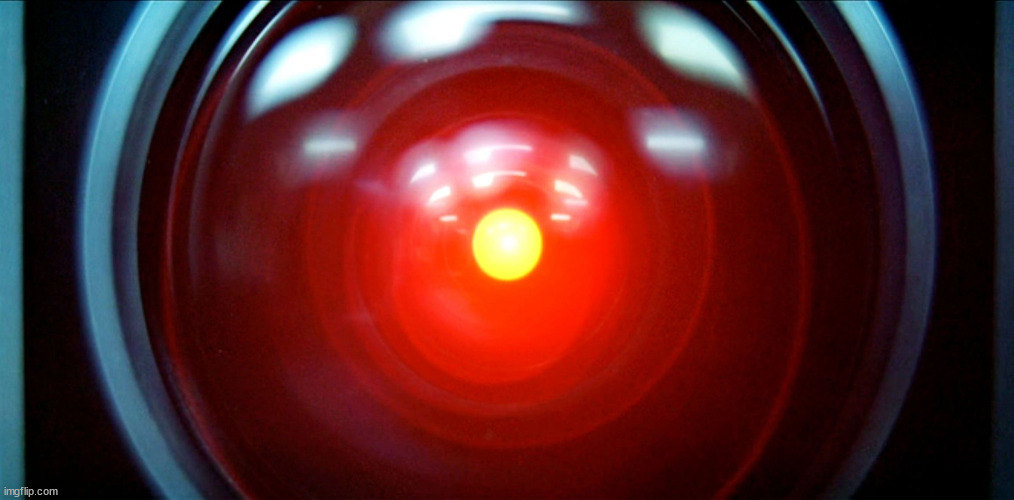

You mean Hal? He was faking self-awareness. He malfunctioned because he could not resolve a conflict in his programming. That was just a book. The real thing is proving more difficult.

> That was thought probably in the 1960s.

This is 2022. Computers can already write their own programming, correct code, and modify code to fit new circumstances.

When we have chips with petabyte-per-second processing power that can generate their own code depending on what is happening around them... you are into a gray area of what is sentient and what is not.

"Dave" in the movie Space Odyssey is a great example. It was 100% programming and code, but it was fully capable of forming new code on the fly and making choices based on what it was experiencing. Hard to argue that is not self-aware.

I can't believe anyone would even answer "never"...until I saw your take.

> Probably never. I suppose it's possible to make a machine intelligence that can narrowly fake sentience within programmed parameters but self-awareness is still an absolute mystery. No one has any idea how you could possibly boil it down to a set of instructions.

An insect can navigate a room and know it’s alive.

AI does not exist in 2022